So you want to build an AI agent? Here's what actually works

Let me tell you something surprising about AI agents. After watching dozens of companies pour resources into fancy frameworks and complex architectures, the teams that actually ship successful agents? They're the ones who kept things embarrassingly simple.

Anthropic just dropped some hard-earned wisdom from their work with enterprise customers, and honestly, it's refreshing to see someone say the quiet part out loud: you probably don't need that complicated agent framework you're eyeing. In fact, you might not need agents at all.

Step 1: Check if you actually need an agent

Here's the thing. Before you even think about building an agent, ask yourself: could a simple prompt solve this? Seriously.

Most problems don't need agents. They need well-crafted prompts with maybe some retrieval-augmented generation (RAG) thrown in. If your task has a clear input-output pattern and doesn't require multiple decision points, you're probably overengineering by reaching for agents.

Think of it this way: if you can flowchart the entire process up front, with every branch defined in advance and no "it depends, use your judgment" steps, you don't need an agent. You need a workflow.
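To make the "simple prompt" baseline concrete, here is a minimal sketch of one well-crafted call with a couple of in-context examples and no loop or tools. The `llm` argument is a hypothetical stand-in for whatever function sends a prompt to your model provider; `build_prompt` and `summarize_ticket` are illustrative names, not part of any library.

```python
from typing import Callable


def build_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Assemble one prompt: instruction, worked examples, then the real input."""
    shots = "\n\n".join(f"Input: {given}\nOutput: {wanted}" for given, wanted in examples)
    return f"{instruction}\n\n{shots}\n\nInput: {query}\nOutput:"


def summarize_ticket(ticket: str, llm: Callable[[str], str]) -> str:
    """One model call, no loop, no tools: the baseline to beat before adding an agent."""
    prompt = build_prompt(
        "Summarize the customer's issue in one short sentence.",
        [("My invoice shows two charges for the same order.",
          "Customer was charged twice for one order.")],
        ticket,
    )
    return llm(prompt).strip()
```

If this kind of single call (optionally with retrieved context pasted into the prompt) gets you acceptable results, you may not need anything more elaborate.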

Step 2: Understand the difference between workflows and agents

This distinction will save you months of development time, so pay attention.

Workflows are predetermined paths. Your LLM follows steps you've coded: first do A, then B, then C.

Perfect for:

Generating content, then translating it

Processing documents through approval stages

Running quality checks on code or content

Agents make their own decisions. They look at the situation and decide what to do next.

You'll need these when:

The number of steps is unpredictable

Each situation requires different tools or approaches

You can't possibly map every decision path

Choose workflows when you want predictability. Choose agents when you need flexibility. Most businesses? They need workflows with maybe a sprinkle of agent-like decision-making at key points.
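The difference comes down to who picks the next step. In this minimal sketch, the workflow's path is fixed in code, while the agent asks the model what to do on every turn. The `llm` callable, the `TOOL <name> <input>` / `DONE <answer>` reply format, and the tool names are all hypothetical conventions chosen for illustration.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: any function that sends a prompt and returns text


def run_workflow(topic: str, llm: LLM) -> str:
    """Workflow: the code fixes the path (generate, then translate)."""
    draft = llm(f"Write a short product blurb about: {topic}")
    return llm(f"Translate into French: {draft}")


def run_agent(goal: str, llm: LLM, tools: dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    """Agent: the model chooses the next action on every turn."""
    transcript = [f"Goal: {goal}", "Reply 'TOOL <name> <input>' or 'DONE <answer>'."]
    for _ in range(max_steps):
        decision = llm("\n".join(transcript)).strip()
        if decision.startswith("DONE "):
            return decision[len("DONE "):]
        parts = decision.split(" ", 2)
        if len(parts) == 3 and parts[0] == "TOOL" and parts[1] in tools:
            # Run the tool the model picked and show it the result next turn.
            transcript.append(f"{parts[1]}({parts[2]}) -> {tools[parts[1]](parts[2])}")
        else:
            transcript.append(f"Could not parse {decision!r}; try again.")
    return "stopped: step limit reached"
```

Notice that the workflow always makes exactly two calls, while the agent's number of calls depends on what the model decides.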

Step 3: Start with the basic building block

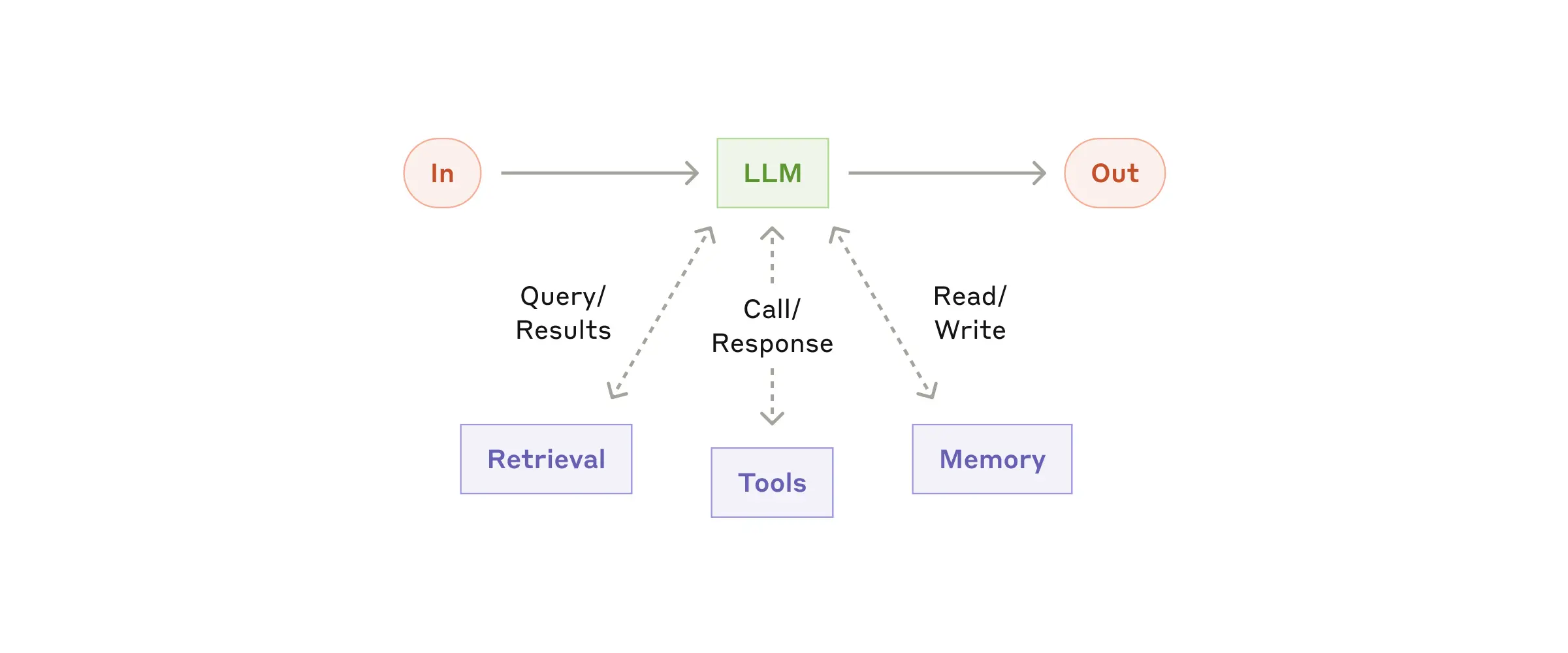

Every agentic system starts with what Anthropic calls an "augmented LLM." Before you do anything else, get this foundation right:

Set up tool access: Your LLM needs to interact with external systems. Start with 2-3 essential tools, not 20.

Add retrieval capabilities: Connect your knowledge base, documentation, or data sources. Make sure your LLM can find what it needs.

Implement memory (if needed): For conversations or multi-step processes, your system needs to remember previous interactions.

Get these three working reliably before moving on. I mean it. Resist the urge to add complexity until these basics are bulletproof.

Credit: anthropic.com
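Here is one way to picture the augmented LLM in code: a small wrapper that holds a few tools, a knowledge base to retrieve from, and a rolling conversation memory. This is a sketch under assumptions: `AugmentedLLM` is a hypothetical class, retrieval is a toy word-overlap ranking standing in for a real search or vector index, and the `llm` callable stands in for your provider's API. Deciding when to call a tool is left to whatever loop or workflow sits on top.

```python
import re
from dataclasses import dataclass, field
from typing import Callable


def _words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class AugmentedLLM:
    """Hypothetical wrapper: an LLM plus a few tools, a small knowledge base, and memory."""
    llm: Callable[[str], str]
    tools: dict[str, Callable[[str], str]]  # keep this to 2-3 essential tools
    knowledge: list[str]
    memory: list[str] = field(default_factory=list)
    memory_turns: int = 3  # how many past exchanges to replay in each prompt

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        """Toy retrieval: rank documents by word overlap with the query."""
        q = _words(query)
        ranked = sorted(self.knowledge, key=lambda doc: len(q & _words(doc)), reverse=True)
        return ranked[:k]

    def call_tool(self, name: str, arg: str) -> str:
        """Run a registered tool, returning an error string instead of crashing."""
        if name not in self.tools:
            return f"error: unknown tool {name!r}"
        return self.tools[name](arg)

    def ask(self, message: str) -> str:
        """Build a prompt from retrieved context and recent memory, then record the exchange."""
        context = "\n".join(self.retrieve(message))
        history = "\n".join(self.memory[-2 * self.memory_turns:])
        prompt = (
            f"Available tools: {', '.join(self.tools)}\n"
            f"Relevant context:\n{context}\n"
            f"Conversation so far:\n{history}\n"
            f"User: {message}"
        )
        reply = self.llm(prompt)
        self.memory.extend([f"User: {message}", f"Assistant: {reply}"])
        return reply
```

Each capability is a plain method, so you can test tools, retrieval, and memory separately before combining them.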

Step 4: Pick your pattern (start simple)

Now for the fun part: choosing how to structure your system. Here are your options, from simplest to most complex:

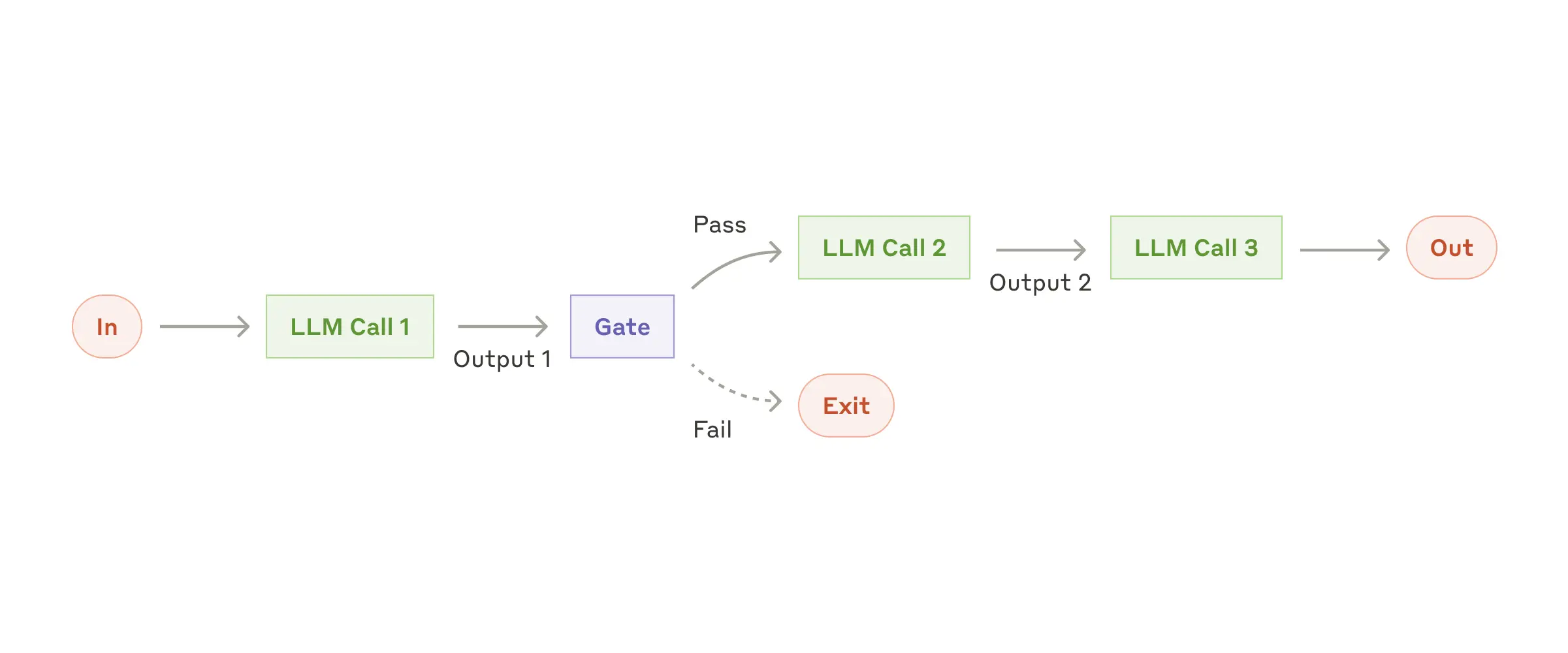

Prompt chaining (start here)

Break complex tasks into steps. Each LLM call handles one piece, passing results to the next.

How to implement it:

Map out your steps linearly

Add validation gates between steps

Build in error handling for each stage

Perfect for: Document creation, multi-stage analysis, any task you can break into clear phases

Credit: anthropic.com
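The three implementation steps above fit in one small function. In this minimal sketch, each step is a prompt template plus a gate (a programmatic check on the output). A step is retried if its output fails the gate or the call raises, and the chain stops with an error naming the failing step. `run_chain`, `ChainError`, and the `{input}` template slot are hypothetical names chosen for illustration.

```python
from typing import Callable


class ChainError(RuntimeError):
    """Raised when a step cannot produce output that passes its gate."""


# Each step: a prompt template with an {input} slot, plus a gate that checks the output.
Step = tuple[str, Callable[[str], bool]]


def run_chain(initial: str, steps: list[Step], llm: Callable[[str], str], retries: int = 1) -> str:
    current = initial
    for index, (template, gate) in enumerate(steps):
        last_problem = "no attempts made"
        for _attempt in range(retries + 1):
            try:
                output = llm(template.format(input=current))
            except Exception as exc:  # e.g. a network error from the model API
                last_problem = f"call failed: {exc}"
                continue
            if gate(output):
                current = output  # passes the gate: feed it into the next step
                break
            last_problem = "output failed the gate"
        else:
            raise ChainError(f"step {index} gave up after {retries + 1} attempts ({last_problem})")
    return current
```

For document creation, the steps might be "write an outline" (gated on having the required sections) followed by "write the draft from this outline".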

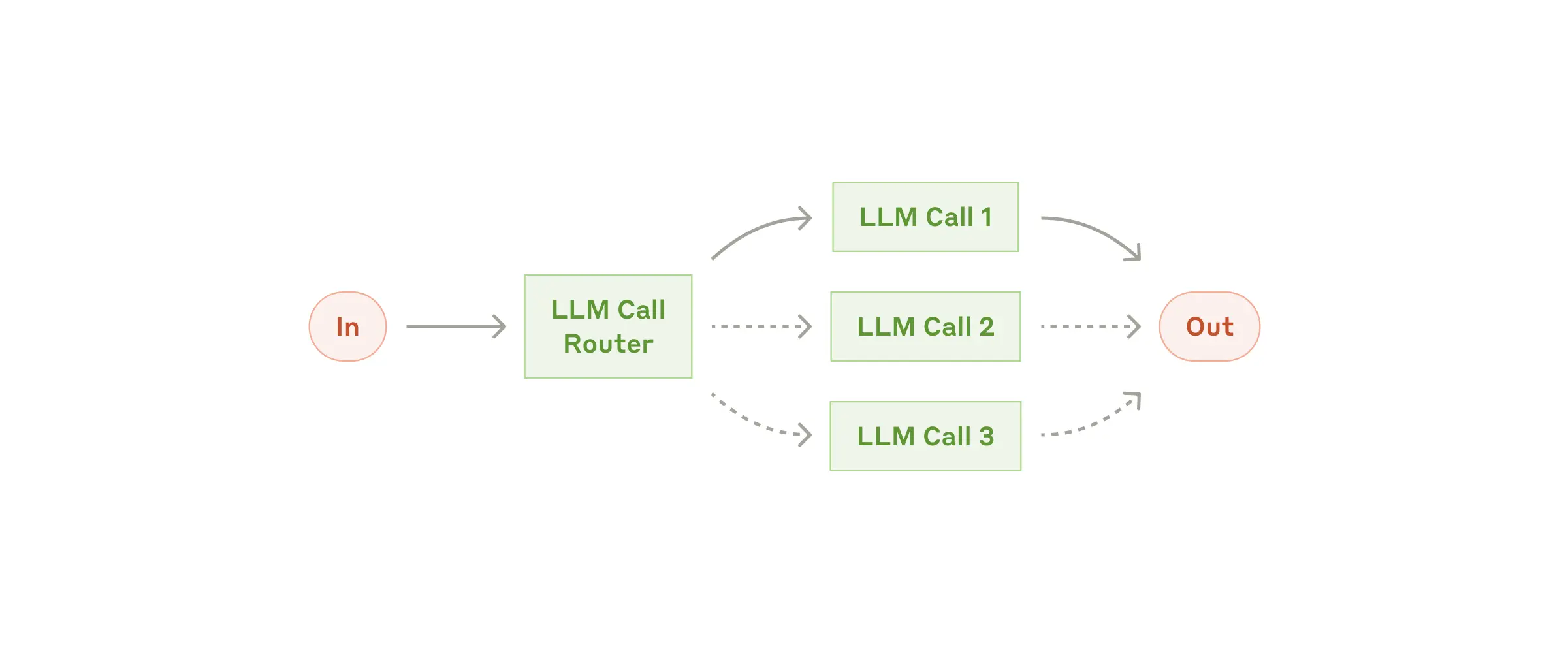

Routing

Direct different inputs to specialized handlers. Like a smart switchboard operator.

How to implement it:

Create a classifier (can be an LLM or traditional ML)

Build specialized prompts for each route

Test the classification extensively (this is where things usually break)

Perfect for: Customer service, technical support, any scenario with distinct categories

Credit: anthropic.com
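A routing setup can be sketched as an LLM classifier, a table of specialized prompts, and a fallback route for answers the classifier gets wrong. Since classification is where routing usually breaks, the sketch also includes a tiny accuracy check over labelled examples. The route names, prompts, and the `llm` callable are hypothetical.

```python
from typing import Callable

# Hypothetical routes: each label maps to a specialized system prompt.
ROUTES = {
    "billing": "You handle billing questions: charges, refunds, invoices.",
    "technical": "You are a support engineer. Ask for error messages and versions.",
    "general": "You are a helpful general support assistant.",
}
FALLBACK = "general"


def classify(query: str, llm: Callable[[str], str]) -> str:
    """Ask the model for a label; fall back if the answer is not a known route."""
    labels = ", ".join(ROUTES)
    raw = llm(f"Classify this customer message as one of: {labels}.\nReply with the label only.\n\n{query}")
    label = raw.strip().lower()
    return label if label in ROUTES else FALLBACK


def handle(query: str, llm: Callable[[str], str]) -> tuple[str, str]:
    """Route the query, then answer it with that route's specialized prompt."""
    label = classify(query, llm)
    return label, llm(f"{ROUTES[label]}\n\nCustomer: {query}")


def routing_accuracy(cases: list[tuple[str, str]], llm: Callable[[str], str]) -> float:
    """Share of labelled test messages that land on the expected route."""
    hits = sum(classify(query, llm) == expected for query, expected in cases)
    return hits / len(cases)
```

Running `routing_accuracy` over a few hundred real, hand-labelled messages is a cheap way to find misroutes before customers do.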

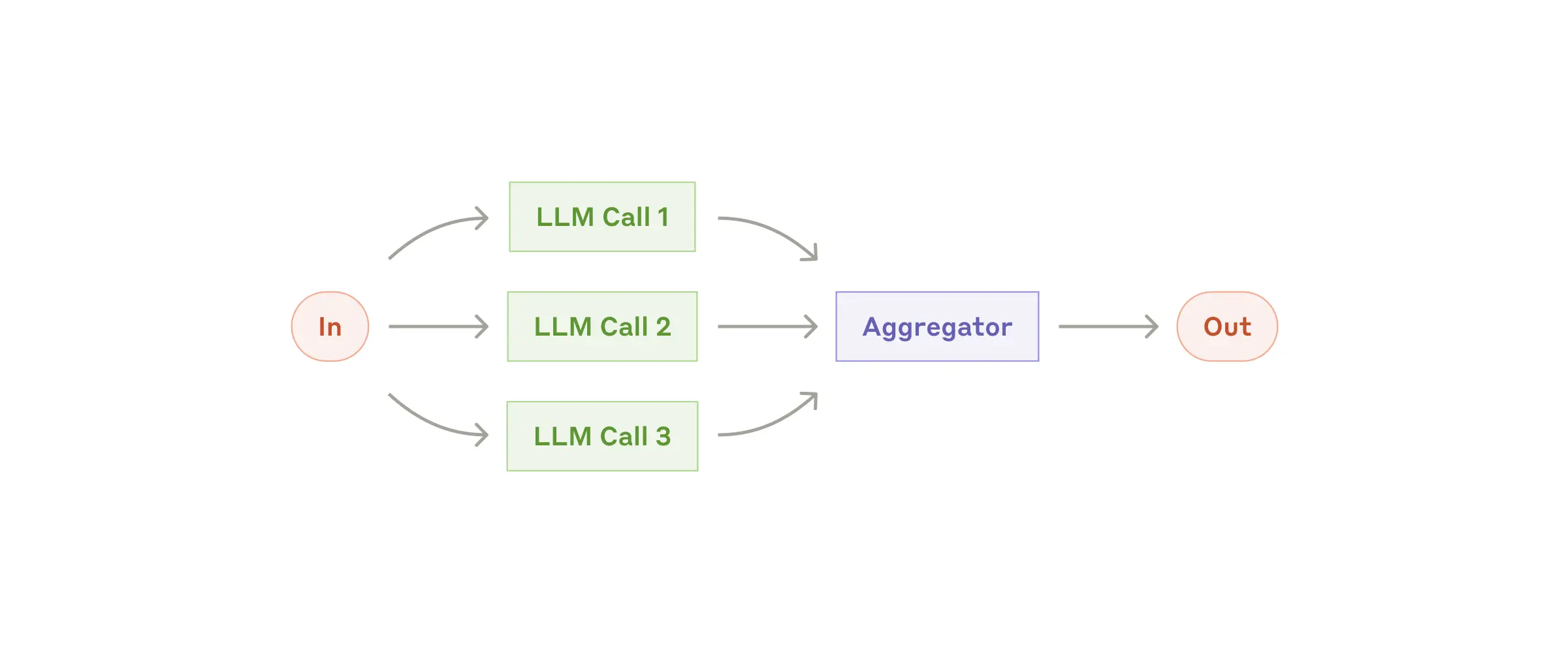

Parallelization

Run multiple operations simultaneously, then combine results.

How to implement it:

Identify independent subtasks

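Either split the work into independent pieces that run at the same time (sectioning) or run the same task several times to get multiple answers (voting)

Decide how to combine the outputs: merge the sections, or take a majority vote or threshold across attempts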

Perfect for: Code review, content moderation, comprehensive analysis tasks

Credit: anthropic.com
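Both variants can be sketched with a thread pool, since model calls spend most of their time waiting on the network. Sectioning runs different checks on the same content concurrently; voting collects several answers (from the same prompt or reworded versions) and keeps the most common one. The `llm` callable is a hypothetical function assumed to be safe to call from multiple threads, and majority vote is just one possible aggregation rule.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: any thread-safe prompt -> text function


def sectioning(content: str, checks: dict[str, str], llm: LLM) -> dict[str, str]:
    """Run independent checks on the same content concurrently; one result per check."""
    with ThreadPoolExecutor(max_workers=len(checks) or 1) as pool:
        futures = {name: pool.submit(llm, f"{instruction}\n\n{content}") for name, instruction in checks.items()}
        return {name: future.result() for name, future in futures.items()}


def voting(prompts: list[str], llm: LLM) -> str:
    """Ask several times (needs at least one prompt) and return the majority answer."""
    with ThreadPoolExecutor(max_workers=len(prompts) or 1) as pool:
        answers = [answer.strip().lower() for answer in pool.map(llm, prompts)]
    return Counter(answers).most_common(1)[0][0]
```

For content moderation, you might pass three differently worded "is this appropriate?" prompts to `voting` and only flag content when the majority says so.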

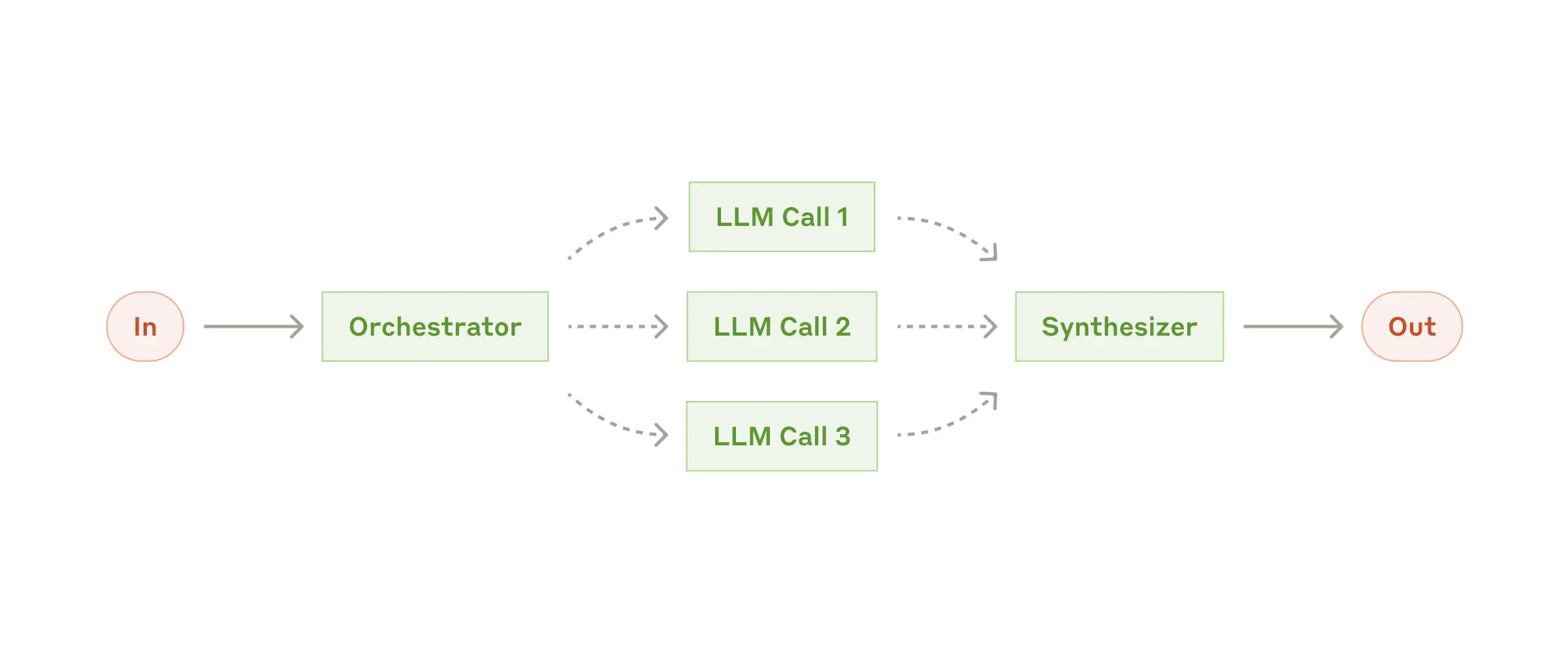

Orchestrator-workers

One LLM manages while others execute. Your orchestrator breaks down the problem and delegates.

How to implement it:

Build a strong orchestrator prompt that can decompose problems

Create specialized worker prompts

Define how subtasks are handed to workers and how their results come back, so the orchestrator can synthesize them into one answer

Perfect for: Complex coding tasks, research projects, anything requiring dynamic subtask generation

Credit: anthropic.com
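The key difference from parallelization is that the subtasks are not known in advance; the orchestrator generates them at run time. In this minimal sketch, the orchestrator returns its plan as a JSON list, workers handle each subtask, and the orchestrator synthesizes the results. The function names, the JSON plan format, and the `orchestrator`/`worker` callables are hypothetical. Workers run one after another here for clarity, but they could run in a thread pool.

```python
import json
from typing import Callable

LLM = Callable[[str], str]  # hypothetical prompt -> text function


def plan_subtasks(task: str, orchestrator: LLM) -> list[str]:
    """Ask the orchestrator to decompose the task; fall back to a single subtask."""
    raw = orchestrator(
        "Break this task into independent subtasks. "
        f"Reply with a JSON list of strings only.\n\nTask: {task}"
    )
    try:
        subtasks = json.loads(raw)
    except json.JSONDecodeError:
        return [task]  # unparseable plan: treat the whole task as one subtask
    if isinstance(subtasks, list) and subtasks and all(isinstance(s, str) for s in subtasks):
        return subtasks
    return [task]


def orchestrate(task: str, orchestrator: LLM, worker: LLM) -> str:
    """Plan, delegate each subtask to a worker, then have the orchestrator combine results."""
    subtasks = plan_subtasks(task, orchestrator)
    results = [worker(f"Complete this subtask and report the result:\n{s}") for s in subtasks]
    report = "\n".join(f"- {s}: {r}" for s, r in zip(subtasks, results))
    return orchestrator(f"Combine these worker results into one answer for: {task}\n{report}")
```

Validating the plan before delegating (and falling back when it is malformed) keeps one bad orchestrator reply from derailing the whole run.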

Step 5: Only then consider autonomous agents

If (and only if) the patterns above won't solve your problem, then look at building true autonomous agents. These systems need:

Clear success criteria: How will the agent know it's done?

Robust error recovery: What happens when things go wrong?

Stopping conditions: Maximum iterations, time limits, resource caps

Human checkpoints: Where should humans review or intervene?

Remember: agents are powerful but expensive. They can compound errors if not properly constrained. Test extensively in sandboxed environments first.
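Each requirement on that list can map to a concrete guard in the agent loop. This sketch assumes a hypothetical `TOOL <name> <input>` / `DONE <answer>` reply format and a hypothetical `llm` callable. It checks a success criterion before accepting an answer, feeds tool errors back to the model instead of crashing, caps both iterations and wall-clock time, and requires human approval for tools you mark as risky (denied by default).

```python
import time
from typing import Callable

Tool = Callable[[str], str]


def run_guarded_agent(
    goal: str,
    llm: Callable[[str], str],
    tools: dict[str, Tool],
    *,
    is_done: Callable[[str], bool] = lambda answer: bool(answer.strip()),  # success criterion
    risky: frozenset[str] = frozenset(),  # tools that need a human checkpoint
    approve: Callable[[str, str], bool] = lambda name, arg: False,  # deny by default
    max_steps: int = 10,  # iteration cap
    max_seconds: float = 60.0,  # wall-clock cap
) -> str:
    history = [f"Goal: {goal}", "Reply 'TOOL <name> <input>' or 'DONE <answer>'."]
    deadline = time.monotonic() + max_seconds
    for _ in range(max_steps):
        if time.monotonic() > deadline:
            return "stopped: time limit reached"
        decision = llm("\n".join(history)).strip()
        if decision.startswith("DONE "):
            answer = decision[len("DONE "):]
            if is_done(answer):
                return answer
            history.append(f"Answer {answer!r} does not meet the success criteria; keep going.")
            continue
        parts = decision.split(" ", 2)
        if len(parts) != 3 or parts[0] != "TOOL" or parts[1] not in tools:
            history.append(f"Invalid action {decision!r}.")
            continue
        _, name, arg = parts
        if name in risky and not approve(name, arg):
            history.append(f"A human declined {name}({arg}); choose another approach.")
            continue
        try:
            result = tools[name](arg)
        except Exception as exc:  # error recovery: report the failure back to the model
            result = f"error: {exc}"
        history.append(f"{name}({arg}) -> {result}")
    return "stopped: step limit reached"
```

In a sandbox you would wire `approve` to a prompt for an operator; in production it might route to a review queue instead.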

Step 6: Avoid the framework trap

I know frameworks like LangGraph look appealing, and drag-and-drop workflow builders seem like they'll save time. But here's your reality check:

Start by calling APIs directly. Most patterns need just a few lines of code. You'll understand exactly what's happening, debugging is straightforward, and you're not fighting through abstraction layers.

If you do use a framework:

Understand what it's doing under the hood

Be able to implement the same thing without it

Have a clear reason why the framework adds value
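To show how little code "calling the API directly" can take, here is a sketch using only Python's standard library against Anthropic's Messages API. It assumes the endpoint, headers, and request/response shape as documented at the time of writing (`/v1/messages`, the `anthropic-version: 2023-06-01` header, a `content` list of text blocks in the response); check the current API reference before relying on it. The API key is read from an `ANTHROPIC_API_KEY` environment variable by assumption, and the model identifier is left as a parameter for you to fill in.

```python
import json
import os
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"


def build_request(prompt: str, model: str, max_tokens: int = 1024) -> urllib.request.Request:
    """Build (but do not send) a single-turn Messages API request."""
    body = {"model": model, "max_tokens": max_tokens, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": os.environ.get("ANTHROPIC_API_KEY", ""),
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


def extract_text(response: dict) -> str:
    """Concatenate the text blocks from a Messages API response body."""
    return "".join(block["text"] for block in response.get("content", []) if block.get("type") == "text")


def complete(prompt: str, model: str) -> str:
    """Send the request and return the model's text (raises on HTTP errors)."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return extract_text(json.load(resp))
```

Every byte that goes over the wire is visible here, which is exactly what makes debugging easier than peeling back framework layers.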

Step 7: Test, measure, iterate

Your first implementation will be wrong. That's fine. Everyone's is. Here's how to improve:

Start with a narrow use case: Don't try to boil the ocean

Measure everything: Response accuracy, latency, cost per interaction

Get user feedback early: Your metrics don't matter if users hate it

Iterate on one component at a time: Don't change everything at once
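"Measure everything" can start as a tiny harness that runs labelled test cases through your system and reports accuracy, latency, and cost per interaction. This is a sketch under stated assumptions: correctness is a simple "expected text appears in the output" check, token counts use a whitespace word count as a rough proxy unless you pass a real tokenizer, and `price_per_1k_tokens` is a hypothetical flat rate you supply from your provider's pricing.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class CaseResult:
    correct: bool
    latency_s: float
    cost_usd: float


def evaluate(
    cases: list[tuple[str, str]],
    system: Callable[[str], str],
    price_per_1k_tokens: float,
    count_tokens: Callable[[str], int] = lambda s: len(s.split()),  # rough proxy for tokens
) -> dict[str, float]:
    """Run each (prompt, expected) case and report averages across all cases."""
    results = []
    for prompt, expected in cases:
        start = time.perf_counter()
        output = system(prompt)
        latency = time.perf_counter() - start
        tokens = count_tokens(prompt) + count_tokens(output)
        results.append(CaseResult(
            correct=expected.lower() in output.lower(),
            latency_s=latency,
            cost_usd=tokens / 1000 * price_per_1k_tokens,
        ))
    n = len(results)
    return {
        "accuracy": sum(r.correct for r in results) / n,
        "avg_latency_s": sum(r.latency_s for r in results) / n,
        "avg_cost_usd": sum(r.cost_usd for r in results) / n,
    }
```

Rerunning the same cases after each single change tells you whether that change actually helped.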

The implementation checklist

Before you write a single line of code:

Can this be solved with a simple prompt?

Do I need a workflow or an agent?

What's the simplest pattern that could work?

What are my success criteria?

How will I handle failures?

Where do humans need to stay in the loop?

The companies succeeding with AI agents aren't the ones with the fanciest tech stacks. They're the ones who started simple, measured everything, and only added complexity when it solved real problems.

Sometimes the best AI strategy isn't building an agent at all. And honestly? That's perfectly fine. The goal isn't to build agents. It's to solve problems. Keep that focus, and you'll be ahead of most teams diving into this space.